One pattern stands out in many of my projects: my code tries to recognize data by its shape using clojure.spec.alpha/conform. Once annotated, the conformed output goes into a recursive function that traverses and transforms it. With deeply nested data this results in confusing dispatch and destructuring on the tags that specs such as s/or add.

However, my only goal is to transform the data, not to annotate it. Since conform knows what the matching spec is (assuming there is one), the transformation could happen right then and there. Yes, part of this could be done with conformers, but that is not general enough, as we’ll see below. Also, I remember reading that coercion is not in scope for spec. OK, please bear with me; we’ll get to that.

An Example

Specs and data we want to transform:

(s/def ::id integer?)

(s/def ::ref (s/tuple #{:ref} ::id))

(s/def ::entity (s/keys :req-un [::id] :opt-un [::child]))

(s/def ::child (s/or
                 :ref ::ref
                 :ent ::entity))

(s/conform ::entity {:id 2
                     :child {:id 3
                             :child [:ref 4]}})

; => {:id 2, :child [:ent {:id 3, :child [:ref [:ref 4]]}]}
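The conformer route mentioned earlier can at least strip the s/or tags at conform time. A minimal sketch, assuming copies of the specs above registered under a hypothetical :untagged namespace (so the originals stay untouched) and using the common idiom of wrapping s/or in s/and with (s/conformer second):

```clojure
(require '[clojure.spec.alpha :as s])

;; Hypothetical copies of the specs above; the :untagged namespace is an
;; assumption made for this sketch only.
(s/def :untagged/id integer?)
(s/def :untagged/ref (s/tuple #{:ref} :untagged/id))
(s/def :untagged/entity (s/keys :req-un [:untagged/id]
                                :opt-un [:untagged/child]))

;; s/or conforms to a [tag value] pair; the conformer keeps only the value.
(s/def :untagged/child
  (s/and (s/or :ref :untagged/ref
               :ent :untagged/entity)
         (s/conformer second)))

(s/conform :untagged/entity {:id 2
                             :child {:id 3
                                     :child [:ref 4]}})
;=> {:id 2, :child {:id 3, :child [:ref 4]}}
```

This gets rid of the tags, but the conformer only ever sees one node at a time, so it cannot build up a result such as the normalized graph described next.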

Transformation function normalize:

Given a nested map of entities that point to other entities or refs, we want to form a normalized graph keyed by refs. Each ref points to an entity, and entities only ever point to refs of other entities. In other words, we want to go from:

{:id 2
 :child {:id 3
         :child [:ref 4]}}

to:

{[:ref 2] {:id 2
           :child [:ref 3]}
 [:ref 3] {:id 3
           :child [:ref 4]}}

Let’s imagine we have (defn spec-walk [f spec x] ...), which is similar to clojure.walk/postwalk but differs in two ways: a) it returns ::s/invalid if the data can’t be validated by the spec, and b) instead of providing f with only the data at a given node, it also passes the key of the matching spec:

(spec-walk (fn [spec-key x]
             (println spec-key x)
             [:transformed x])
           ::entity
           {:id 2
            :child {:id 3
                    :child [:ref 4]}})

; prints:
; ::ref [:ref 4]                     ; first and deepest spec call
; ::entity {:id 3                    ; next up
;           :child [:transformed [:ref 4]]}
; ::entity {:id 2                    ; root entity
;           :child [:transformed {:id 3
;                                 :child [:transformed [:ref 4]]}]}
; notice the `s/or` keys are ignored.

; returns
;=> [:transformed {:id 2
;                  :child [:transformed {:id 3
;                                        :child [:transformed [:ref 4]]}]}]
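For comparison, here is what plain clojure.walk/postwalk offers today. This is only a sketch: entity-like? is a hypothetical shape check introduced for illustration, standing in for knowledge a spec would already have. Because postwalk hands f nothing but the node, f has to re-detect the shape itself:

```clojure
(require '[clojure.walk :as walk])

(def data {:id 2
           :child {:id 3
                   :child [:ref 4]}})

(defn entity-like?
  "Hypothetical shape check, not part of spec or clojure.walk."
  [x]
  (and (map? x) (contains? x :id)))

;; postwalk visits children first, then the parent, like the imagined spec-walk,
;; but f only receives the node and no spec key.
(def walked
  (walk/postwalk (fn [x]
                   (if (entity-like? x)
                     [:transformed x]
                     x))
                 data))

walked
;=> [:transformed {:id 2, :child [:transformed {:id 3, :child [:ref 4]}]}]
```

Unlike the imagined spec-walk output, the ref is left untouched here because the hand-written check only recognizes entity maps, and nothing is validated along the way.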

Good, spec-walk can walk our data and tell us which specs succeed along the way. It’s going to be a little ugly but we can imperatively achieve our normalization goal:

(let [out (volatile! {})]
  (spec-walk (fn [spec-key x]
               (case spec-key
                 ::ref x
                 ::entity (let [ref [:ref (:id x)]]
                            ;; vswap! takes the volatile first, then the fn and its args
                            (vswap! out assoc ref x)
                            ref)))
             ::entity
             {:id 2
              :child {:id 3
                      :child [:ref 4]}})
  @out)

; should return:
;=> {[:ref 2] {:id 2
;              :child [:ref 3]}
;    [:ref 3] {:id 3
;              :child [:ref 4]}}

We could imagine another function, spec-reduce-replace, that passes a context to f and expects f to return a pair: the (optionally updated) context and a replacement value for x:

(defn spec-reduce-replace [f init spec x])

(spec-reduce-replace (fn [ctx spec-key x]
                       (case spec-key
                         ::ref [ctx x]
                         ::entity (let [ref [:ref (:id x)]]
                                    ;; return [updated-context replacement]
                                    [(assoc ctx ref x) ref])))
                     {}
                     ::entity
                     {:id 2
                      :child {:id 3
                              :child [:ref 4]}})

; should also return
;=> {[:ref 2] {:id 2
;              :child [:ref 3]}
;    [:ref 3] {:id 3
;              :child [:ref 4]}}
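Since spec-walk and spec-reduce-replace are imaginary, here is a runnable baseline for checking the expected result. It is a sketch under stated assumptions: normalize-by-hand is a hypothetical helper that hard-codes knowledge of ::ref and ::entity and dispatches with s/valid? instead of being told which spec matched. It returns the same [context replacement] pair that spec-reduce-replace would expect from f:

```clojure
(require '[clojure.spec.alpha :as s])

(s/def ::id integer?)
(s/def ::ref (s/tuple #{:ref} ::id))
(s/def ::entity (s/keys :req-un [::id] :opt-un [::child]))
(s/def ::child (s/or :ref ::ref
                     :ent ::entity))

(defn normalize-by-hand
  "Hypothetical helper, not part of spec. Returns [graph replacement]."
  [graph x]
  (cond
    ;; a ref is already normalized
    (s/valid? ::ref x) [graph x]

    ;; normalize the child first, then register this entity under its ref
    (s/valid? ::entity x)
    (let [[graph child-ref] (if (contains? x :child)
                              (normalize-by-hand graph (:child x))
                              [graph nil])
          entity (cond-> x child-ref (assoc :child child-ref))
          ref [:ref (:id x)]]
      [(assoc graph ref entity) ref])

    :else [graph ::s/invalid]))

(first (normalize-by-hand {} {:id 2
                              :child {:id 3
                                      :child [:ref 4]}}))
;=> {[:ref 3] {:id 3, :child [:ref 4]}, [:ref 2] {:id 2, :child [:ref 3]}}
```

Note that this re-validates every subtree at each level and repeats by hand the structure the specs already describe, which is exactly the duplication a spec-aware walk would avoid.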

OK, time to stop dreaming! Yes, spec-walk and spec-reduce-replace are probably not what spec was originally designed for. However, are specs, like Clojure’s data structures, extensible so that functions can be added for each type of spec? Let’s see:

Here’s the type of our ::id spec from before:

(type (s/spec ::id))
;=> clojure.spec.alpha$spec_impl$reify__2069

From clojure.spec.alpha:

(defn ^:skip-wiki spec-impl
  "Do not call this directly, use 'spec'"
  ([form pred gfn cpred?] (spec-impl form pred gfn cpred? nil))
  ([form pred gfn cpred? unc]
   (cond
     (spec? pred) (cond-> pred gfn (with-gen gfn))
     (regex? pred) (regex-spec-impl pred gfn)
     (ident? pred) (cond-> (the-spec pred) gfn (with-gen gfn))
     :else
     (reify
       Spec
       (conform* [_ x] (let [ret (pred x)]
                         (if cpred?
                           ret
                           (if ret x ::invalid))))
       (unform* [_ x] (if cpred?
                        (if unc
                          (unc x)
                          (throw (IllegalStateException. "no unform fn for conformer")))
                        x))
       ; ... elided
       ))))

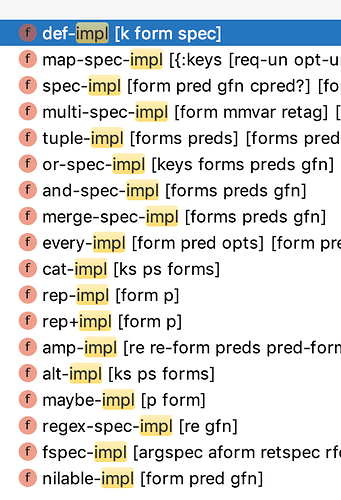

Every spec-impl, and there are many, uses reify, creating objects implementing the Spec protocol on the fly. In order to extend these objects with another protocol (e.g. SpecTraverse with our methods spec-walk and spec-reduce-replace), each impl would have to be wrapped in a foreign namespace by another implementation containing another reify that also defines the methods for SpecTraverse.
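To make the limitation concrete, here is the closest thing I could sketch without wrapping anything. It relies on two assumptions: that the reified objects all implement the interface backing the Spec protocol (clojure.spec.alpha.Spec), and that s/form returns either a symbol or a seq whose first element names the spec macro. The protocol method spec-kind is hypothetical and exists only for this illustration:

```clojure
(require '[clojure.spec.alpha :as s])

;; Hypothetical protocol, named after the SpecTraverse idea above.
(defprotocol SpecTraverse
  (spec-kind [this] "Returns a symbol describing what kind of spec this is."))

;; The only type all reified specs have in common is the protocol's interface,
;; so that is the only place a foreign protocol can attach.
(extend-protocol SpecTraverse
  clojure.spec.alpha.Spec
  (spec-kind [this]
    (let [form (s/form this)]
      ;; dispatch has to fall back to inspecting the form, not the type
      (if (seq? form) (first form) form))))

(spec-kind (s/spec integer?))
;=> clojure.core/integer?

(spec-kind (s/tuple integer? integer?))
;=> clojure.spec.alpha/tuple
```

The extension applies to every spec at once. There is no distinct type for a tuple spec versus a keys spec to extend separately, so any per-kind behaviour ends up as a hand-written dispatch on symbols rather than protocol polymorphism.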

How does spec not support Clojure’s essential polymorphism?

AFAIU something like this is impossible with spec and spec2:

(defprotocol LookMagic
  (magic! [this]))

(extend clojure.lang.IPersistentVector
  LookMagic
  {:magic! (fn [this] (conj this "Not exactly magic, but extensible it is!"))})

(magic! [1 2 3])
; => [1 2 3 "Not exactly magic, but extensible it is!"]

This seems incredibly backwards to me and I hope very much that someone will prove me wrong. As for the argument that coercion is not in scope for spec, that’s fine. The entire library ecosystem outside clojure.core was not in scope for Clojure either, but it exists because Clojure can be composed and extended like hardly any other language.

Why is this not true for spec?