I certainly agree there is a use case where it makes better sense to release a library under a new name rather than just a new major version number. You example with honesql is a good one. However, I don’t see that as sufficient to justify not using semantic versioning. There is nothing in semantic versioning which prevents anyone from releasing a library under a new name and in fact, I have done this in the past when the library has changed so significantly it no longer resembled the original API or when I wantged to provide a more controlled or fine grained upgrade path (as you did with honeysql).

I also agree with the issue you raised regarding transitive dependencies. This is a problem and in fact, in my first draft response, I also included it as an example of one of the weaknesses in semantic versioning. However, I removed it, partly to make the response shorter, but also because I don’t believe the alternative approach of releasing the library under a new name addresses that issue either. In fact, I wonder if, in a gradual transition approach as you outlined with honeysql, could the situation be even worse as you are likely going to be loading two versions of tghe same transitory dependency II guess if all libraries stopped using semantic versioning and all libraries used a new name when releasing a new version, this may not be an issue, but I don’t see that as terribly likely).

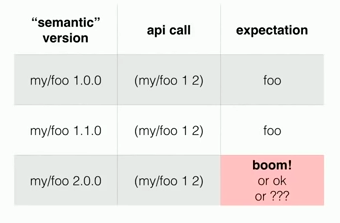

No, I do not consider semantic versioning to be broken. However, this goes back to my original point regarding expectations. I don’t have any expectation that semantic versioning solves the messy reality associated with updating dependencies. I don’t think there is any great solution. All we have are some practices which can help with assessing the likely impact of an upgrade. However, this is only a likely indicator, not a sold gold guarantee. All a semantic version number can really provide is a high level indication of what has changed in the version. It provides no guarantee regarding how the changes will impact your application. In fact, I wouuld argue that the real problem with semantic versioning is that people interpret the version as implying too much with respect to any applicaiton which uses it. Obviously, from a library pespecitve, you cannot imply anything regarding applicaitons which use your library. A libraries version number, semantic or otherwise, cannot imply anything regarding the applications which use it. It can only impart information about the library it is associated with. Anyone who is surprised to find their applicaiton is broken after upgrading a dependency which only had minor or patch/bugfix level changes i.e. from 1.0.0 to 1.0.1 or 1.1.0 is misinterpreting what a semantic version is telling them. It isn’t telling them that the changes are not going to break their applicaiton. It is only telling them that the changes either add new functionality or fix a known issue. As the major number hasn’t changed, it is also telling them that existing API signatures and return values have not changed ‘shape’. However, as the library author doesn’t know how you use the library, they cannot know precisely whether, for example, your applicaiton didn’t rely on the issue/bug which has been fixed.

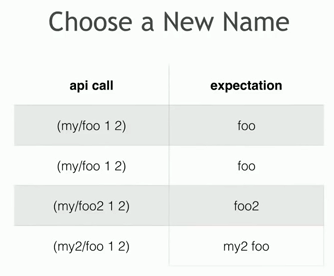

Version and dependency management is tough. I don’t think there are any short cuts. I find semantic versioning useful as it provides at a glance, some additional information which can help manage dependencies for my project. I don’t interpret semantic verisoning as providing any hard gurantee and approach all dependency updates as potential breakage regardless of the version number. A semantic version gives me a high level summary of the extent of change within a library and can provide me with some expectations regarding the effort associated with the upgrade, but implies no gurantees. The alternative is a version number which is bespoke for each library. It tells me nothing other than it is a different version. This isn’t necessarily a bad thing and I certainly do’t argue everyone should use semantic versioning. You should use whatever approach is right for your project (just be clear about that to avoid confusion and misaligned expectations). Likewise, I don’t think we should tell people not to use semantic versioning.

Like many things, the better solution likely lies somewhere in the middle. For exmaple, there is nothing preventing you from using semantic versioning and when it is appropriate, releasing a library under a new name with a new semantic version.